- Category: Process

Tampering with food produce at source remains the single greatest threat to the secure provision of food (food security).

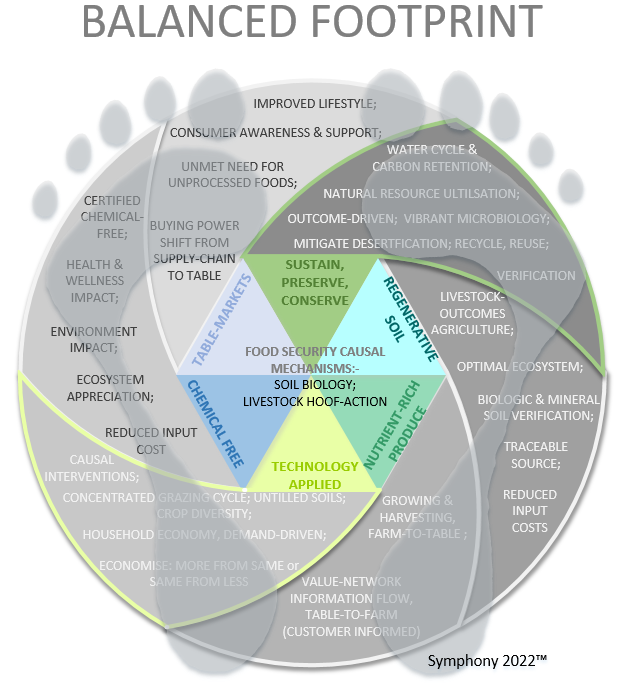

Balanced Footprints based on Qualitative metrics are core to the future of sustainable agriculture and food security. A single footprint favours one specific context or requirement and is therefore an incomplete indicator of a healthy ecosystem.

Functional performance is essential to balancing the Ecology and farm Enterprise perspectives. The work begins at a micro-level, where the circumstances and conditions are known and controlled, and then builds towards a reliable solution.

Too many imperfect interventions cause a negative impact on basic outcomes such as health and wellness.

The World Economic Forum (WEF) argues for a footprint that remedies climate change by limiting CO2 emissions. Its ideal footprint depicts an irrational strategy that treats the symptom and not the cause. No wonder that, wherever WEF principles are followed, the outcomes are dismal, as they miss key points of causation:-

- Natural resources respond aptly when a balanced footprint exists at micro-level between the Enterprise and Ecology contexts. The enterprise must sustain itself over the long term under competitive market rivalry, meeting customers’ needs while ensuring a continuum of adequately maintained resources. Ecology must function at an optimal level, able to supply whatever the food source requires in terms of minerals and soil biology, thus contributing to a vibrant ecosystem. When either of the two delicate footprints is disrupted through unproven human interventions, both footprints suffer, resulting in food insecurity and further depreciation of the ecosystem. Harsh consequences follow a continuous regimen of human interventions in nature and agriculture;

- Attention cannot simply focus on carbon emissions. When soil degradation occurs, other key drivers are involved in cause and effect, such as ineffective rainfall, exogenous interventions and financial incentives. The issue is that a correlated symptom is treated instead of the underlying causes and ineffective practices being removed. Treating symptoms is, in turn, yet another inflationary contributor;

- Fossil fuels remain integral to the provision of basic energy supplies that stimulate economic growth and cap the cost of living. The real crunch is that energy production constitutes a substantially lower threat to Ecology, food security and health than equivalent chemical-based inputs (fertilisers, pesticides, preservatives and processed additives). Ceasing to administer chemicals depends more on understanding customer-informed requirements (Table to Soil) than on following a change-over procedure. The motivation for source producers to cease ill practices lies with the Table-market, which must express its unmet need for uncontaminated, nutrient-dense produce. Such unmet needs, driven by health and wellness, are tied to a lifestyle change and are very different from a short-term fad. The message to producers is a signal to build retail relationships and to grow their target market with wholesome produce. Those who counter-argue with economies of scale and plague spoilages are doing so from an unbalanced footprint context;

- There is no natural law that supports their climate change theory, which rests only on correlated variables and a likelihood of association. To be clear, correlation does not indicate a cause. The root lies squarely in events of inappropriate management. Creation is groaning and worldwide there are floods and natural disasters, yet funding generic infrastructure intended to remedy one problem often yields an unexpected outcome. Changing the course of a dry river bed and building a town downstream on its original path spells disaster (Laingsburg, January 1981). Management decisions and dictated Policy that override a deep understanding of cause and effect are doomed to fail. It is the duty of markets and producers to allow appropriate flows of information that restrain exogenous organisations and consultants from implementing ill-advised policy. The cause and effect has a long-term negative bearing;

- Natural News published a report that scientists are yet to refute. The report questions fundamental physical principles of the greenhouse gas theory and challenges the conventional climate dictum that carbon dioxide (CO2) acts as a greenhouse gas with unique warming properties. Based on an 1827 publication, the hypothesis states that water vapour and CO2 act as a shield that prevents infrared radiation (IR) from escaping into the outer atmosphere. The hypothesis applied the 200-year-old technology of spectrographic analysis to derive that dipole gases absorb infrared radiation, hence the theory of global warming. The Allmendinger research tested gas properties through thermal absorption rather than spectrographic wave absorption and reported no greenhouse capabilities within CO2, O2, N2 or Ar gases. “As a consequence, a ‘greenhouse effect’ does not really exist, at least not related to trace gases such as carbon dioxide.”

https://www.naturalnews.com/2022-07-28-greenhouse-gas-effect-does-not-exist.html

This week, the Dutch government is pushing to buy up some 3,000 farming production units and to liquidate all livestock to reduce carbon emissions. The Netherlands is the world’s second-largest technology-driven agriculture powerhouse and a primary source of supply for west-European countries, which it supplies annually with 4 million cattle, 13 million pork and 104 million poultry carcasses.

https://www.telegraph.co.uk/world-news/2022/11/28/netherlands-close-3000-farms-comply-eu-rules/

- Category: Process

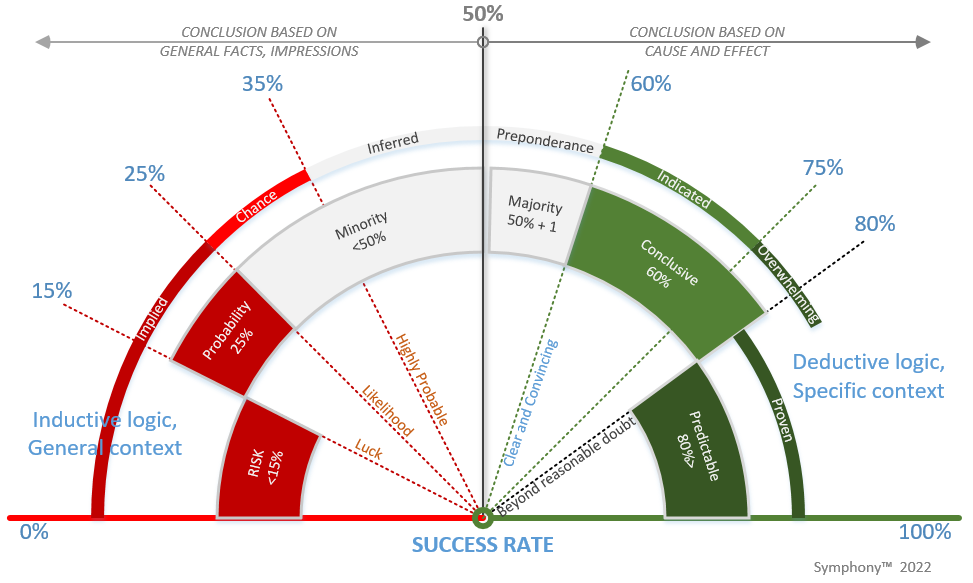

Success is paramount to every business and defines business acumen. Everything about a Brand and its Reputation stands on its ability to replicate its own success. Without a positive track record that indicates a proven methodology, trust and confidence give way to scepticism. But there is a deeper issue that underpins Success, and business leadership should make every effort to shift internal paradigms accordingly. The issue centres on how information is gleaned, categorised and brought to a conclusion firm enough to inform strategic decisions and provide context. Conclusive information is paramount to success, yet business leaders would rather normalise ‘failure’ and accept its daily presence than make the effort to validate and verify assumptions. Business literature normalises this unfocussed behaviour, treating failures that stem from irrational decisions as part of ‘lessons learned’ and ‘learning and growing’. The root cause rests in business leadership’s acceptance of mediocre information, compounded by an unwillingness to move from quantitative measurements and historic data benchmarks to uncovering qualitative metrics based on customer criteria.

The common failure is that executives resign themselves to failure as an ‘acceptable norm’, yet leadership does not act to replace ill logic (circular or uninformed arguments), generalised assumptions (everyone knows that …) and intuitions (non-functional impressions, look-and-feel) with practices aimed at deriving sound information, verified conclusions and improved decision-making. A typical example is developing and launching a product based on a good idea without knowing which needs are not being fulfilled, what customers perceive as an unmet need, which functional elements are of high importance to their intended outcome, and whether there are factors within the value proposition that would remedy a specific segment’s low satisfaction. Without a deeper understanding of customer criteria, the rate of success will continue to range below 50% as dismal innovations bring dysfunctional products, services and offerings to market in the false hope that clever marketing will recover a measure of success. The emphasis has moved from a prerequisite informed and conclusive position to an untenable risk based on inconclusive hope and luck. No matter how strong the internal competences are (ability, capacity, execution), the value system cannot correct the fundamental flaw when decisions are based on inconclusive information.

Businesses are quick to adopt a strategy, campaign or initiative based simply on the Probability, Likelihood or Chance of success, thinking unrealistically that the odds will favour their expected outcome. Driving their motivation is a pre-conceived, amorphous vision which promises to catapult the organisation into a favourable competitive position, where perceived success blinks brightly. When the information base is weak and the defined success-rate band signals an alarming ‘inconclusive’ warning commensurate with ‘Risk’, failure is imminent. Knowing that the ‘Probability’ band represents less than a 25% rate of success, why would any competent leadership accept the low integrity of assumptions and allow the enterprise to position itself in such a precarious quadrant? The answer lies squarely in watered-down definitions that we have come to accept through normalisation and a perceived lack of alternatives. Instead of sounding alarm bells, leadership justifies a likely ‘probability’ that rings a plausible ‘good enough’.

Business acumen and sound logic demand that rational assumptions and base information be tested rigorously. This ensures an informed relevance in terms of the specific circumstances and conditions that impact customer needs, rather than the enterprise itself. Based on this ‘customer paradigm’, a series of ‘customer-informed conclusions’ is formalised into ‘success-rate bands’ that business leaders can apply to test validation and verification. The presence of ‘success-rate bands’ in decision-making is a disciplined business leader’s armour: it ensures accurate, relevant and conclusive information before any resources are committed, and so serves as a guide to separate noise from factual data. The quality of decision-making will improve as staff members and teams adopt the approach with greater understanding. This sets the foundation for the organisation to move from micro quantitative measurements and ratios to qualitative metrics that render desired outcomes, a fundamental basis for Success.

What should interest our business leaders is the cut-off level at which they set their conclusive acceptance, and whether they set a universal band of acceptance throughout the enterprise. Realising the imperative function that thorough conclusions can serve would make business leaders more attentive to the true definition of the bands and steer leadership away from depending on sub-standard conclusions.

Here are some categories where Success is most certain:-

- Predictable conclusions are top of the class, yielding a success rate in excess of 80%. Proven and Overwhelming evidence-based conclusions are derived from an understanding of causation through a normative process that is capable of rendering a predictable outcome. Enterprises that focus on developing their strategic decisions in the ‘Predictable’ band will typically ensure that customer criteria are known and very specific to each market segment’s prevailing circumstances and conditions. The certainty of being customer-informed overrides any generalised conclusion or assumption pertaining to unmet needs. By definition, the basis of factual information is ‘beyond reasonable doubt’, and this is precisely where competitors fail to keep up. This band affords an edge towards a unique and valued position, hence its certainty;

- Clear and Conclusive conclusions fall in the band between a 60% and 80% success rate. Conclusions are indicative by definition; the only differentiating factor is that customer criteria cannot be met fully by the current internal value creation required to render increased levels of satisfaction. Yet the success rate is still excellent and most gratifying, and functional performance will improve as the firm develops its competences and enhances value creation with greater precision and accuracy (sustained development trajectory).

Here are some categories where Failure is most certain:-

- Preponderance and general consensus. Although most criteria are revealed to the firm’s intelligentsia, they are unlikely to depart from their traditional habits. Many successful organisations insist on pinning their success rate on amorphous ideas that neither address the root cause of market fault lines nor resolve customer issues, deficiencies, obstacles and shortcomings.

In the eyes of most enterprises, they have spotted a gap and will keep on repeating the formula. The problem is that their learning and growing accomplishments are pinned on trial and error, which places their trusted approach in a non-existent ‘grey zone’ between success and failure;

- Probability is the antonym of Predictability and covers the bottom end of the success-rate chart. With the advent of supply-driven enterprises (commencing after the Industrial Revolution), surpluses needed to be monetised and ploughed back into working capital. This gave rise to the Industry of Marketing, based on motivation and probability. Normalising the phenomenon of ‘you have a rubbish product but we’ll move it’ resulted in the acceptance of a sub-standard probability.

During the era of Household economies prior to the Industrial Revolution, products and services were not pushed into markets, hence the old saying ‘good wine needs no bush’. The result of a supply economy is that ideas are converted into products without proof of demand criteria. It has since fallen to marketers to devise a value proposition telling markets what they need! Household economies were customer-informed and the value proposition was definitive prior to product development.

If business success were dependent upon the toss of a coin, every enterprise would at least stand a 50/50 chance. The statistical reality is far worse: young businesses face a success rate of less than 15%, as if the coin had been replaced by a die that must roll a ‘6’. No wonder more than 90% of newly registered companies fail over the short term; their ‘informed conclusions’ and decision-making were all probability-based;

- ‘Luck’ is something every Board wishes for, but the success-rate band indicates less than a 15% rate of success; in fact it amounts to ‘Risk’. When the decision is made, every factor of consideration is based on Risk: the assumptions are systemically flawed, the evidence is unsupported and, if nothing else, negligence prevails. If not for an uncontrolled, unforeseen exogenous factor or event (e.g. competitor collapse, cancelled order, supply chain closure …), ‘success’ would never have prevailed. Factually, no foreknowledge of success came from a knowledgeable decision or internal perspective; none of the business inputs could possibly have contributed to the expected outcome; it could only be derived from uncontrollable external circumstances and conditions.

This should explain why ‘luck’ is not a bankable credit for management but rather a ‘get-out-of-jail’ card. Firms that depend upon such free interventions are no more than Pirates who operate in the ‘Implied conclusion’ band with less than a 25% success rate;

- ‘Risk’ covers the lowest end of probable conclusions. Many a claim of being ‘risk-averse’ is seen as a sign of weakness, yet risk aversion is a function of success: mitigate Risk by seeking conclusive evidence, thus becoming customer-informed. Risk-centric organisations are nothing more than adrenalin junkies who wager capital resources on whims and intuitions, without due consideration of consequences. Many a good idea, and many a visionary management, has no substance relative to market requirements and unmet needs, nor is there any evidence of an understanding of market fault line issues, deficiencies, obstacles and shortcomings.

Investors should steer clear and rather look for customer-informed businesses that understand unmet customer needs.